Page 210 - AI Computer 10

3 AI Model Evaluation

- Model Evaluation
- Classification Model Evaluation Terminologies
- Model Evaluation Techniques or Metrics
- Ethical Concerns Around Model Evaluation

Model evaluation is a crucial part of the AI project cycle. It helps us measure the performance of a model and identify possible changes to improve it. Evaluation refers to the process of assessing the reliability of an AI model by feeding it a test dataset that has not been used for training the model. After the performance of the model has been evaluated, improvements can be designed and implemented to make the model more efficient, accurate, and reliable.
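The idea of testing on data the model has never seen can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the "hours studied" data is hypothetical, the model is a toy threshold rule, and the helper names `train_test_split`, `train_threshold`, and `predict` are not taken from any particular library.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and hold out a fraction that the model never trains on."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def train_threshold(train_rows):
    """'Train' a toy model: the midpoint between the mean input of each class."""
    passed = [x for x, label in train_rows if label == 1]
    failed = [x for x, label in train_rows if label == 0]
    return (sum(passed) / len(passed) + sum(failed) / len(failed)) / 2

def predict(threshold, x):
    """Predict 1 (pass) when the input reaches the learned threshold."""
    return 1 if x >= threshold else 0

# Hypothetical data: (hours studied, passed the exam? 1 = yes, 0 = no)
data = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 1), (6, 1), (7, 1), (8, 1),
        (1.5, 0), (2.5, 0), (6.5, 1), (7.5, 1)]

train_rows, test_rows = train_test_split(data)
threshold = train_threshold(train_rows)   # uses training rows only

# Evaluation uses only the held-out test rows.
correct = sum(predict(threshold, x) == label for x, label in test_rows)
test_accuracy = correct / len(test_rows)
print(f"threshold={threshold:.2f}, test accuracy={test_accuracy:.2f}")
```

Because the test rows play no part in choosing the threshold, the accuracy measured on them is a fairer estimate of how the model might behave on new data than accuracy measured on the training rows.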

MODEL EVALUATION

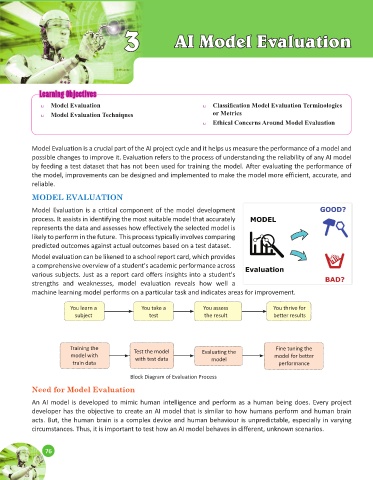

Model evaluation is a critical component of the model development process. It helps identify the model that best represents the data and estimates how well the selected model is likely to perform in the future. This process typically involves comparing predicted outcomes against actual outcomes on a test dataset.

Model evaluation can be likened to a school report card, which provides a comprehensive overview of a student's academic performance across various subjects. Just as a report card offers insights into a student's strengths and weaknesses, model evaluation reveals how well a machine learning model performs on a particular task and indicates areas for improvement.
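Comparing predicted outcomes against actual outcomes can be sketched in a few lines. The labels below are hypothetical, and the helpers `accuracy` and `mistakes` are illustrative names rather than functions from a specific library. Accuracy plays the role of the overall grade, while the list of mistakes shows where the model needs improvement.

```python
def accuracy(actual, predicted):
    """Fraction of predictions that match the actual outcomes."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    matches = sum(a == p for a, p in zip(actual, predicted))
    return matches / len(actual)

def mistakes(actual, predicted):
    """List (position, actual, predicted) for every wrong prediction."""
    return [(i, a, p)
            for i, (a, p) in enumerate(zip(actual, predicted))
            if a != p]

# Hypothetical test-set labels and the model's predictions for them.
actual    = ["spam", "ham", "spam", "ham", "ham",  "spam", "ham", "ham"]
predicted = ["spam", "ham", "ham",  "ham", "spam", "spam", "ham", "ham"]

score = accuracy(actual, predicted)
wrong = mistakes(actual, predicted)
print(score)   # 0.75 (6 of 8 predictions are correct)
print(wrong)   # [(2, 'spam', 'ham'), (4, 'ham', 'spam')]
```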

You learn a subject → You take a test → You assess the result → You strive for better results

Training the model with training data → Testing the model with test data → Evaluating the model → Fine-tuning the model for better performance

Block Diagram of Evaluation Process
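The four stages of the diagram (train, test, evaluate, fine-tune) can be walked through with a toy example. This is a sketch under stated assumptions: the data is hypothetical, the model is a simple threshold rule, and "fine-tuning" here only means trying a few nearby thresholds and keeping the best one. In practice a separate validation set is usually kept for tuning so that the final test data stays unseen. The diagram does not go into that detail.

```python
def evaluate(threshold, rows):
    """Fraction of rows where the threshold rule matches the actual label."""
    hits = sum((x >= threshold) == bool(label) for x, label in rows)
    return hits / len(rows)

# Hypothetical data: (hours studied, passed the exam? 1 = yes, 0 = no)
train_rows = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
held_out_rows = [(2.5, 0), (4, 0), (4.5, 1), (5.5, 1)]

# Stage 1: train. Use the midpoint between the two class means.
fail_mean = sum(x for x, label in train_rows if label == 0) / 3
pass_mean = sum(x for x, label in train_rows if label == 1) / 3
threshold = (fail_mean + pass_mean) / 2

# Stages 2 and 3: test on held-out data and evaluate the result.
baseline = evaluate(threshold, held_out_rows)

# Stage 4: fine-tune. Try nearby thresholds and keep the best-scoring one.
candidates = [threshold + delta for delta in (-1.0, -0.75, -0.5, 0.0, 0.5)]
best_threshold = max(candidates, key=lambda t: evaluate(t, held_out_rows))
tuned = evaluate(best_threshold, held_out_rows)

print(f"baseline: threshold={threshold}, accuracy={baseline}")
print(f"tuned:    threshold={best_threshold}, accuracy={tuned}")
```

The loop mirrors the student analogy: the first result (the baseline) shows a weakness, and the adjustment is kept only because it scores better on the same kind of check.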

Need for Model Evaluation

An AI model is developed to mimic human intelligence and to perform tasks as a human being does. Every project developer aims to create an AI model that performs the way humans do and works the way the human brain does. However, the human brain is complex and human behaviour is unpredictable, especially in varying circumstances. Thus, it is important to test how an AI model behaves in different, unknown scenarios.
